Ahead of tomorrow’s joint event of The Talmud Blog and Digital Humanities Israel on “Open digital Bible, Mishna and Talmud,” Hayim Lapin of the University of Maryland has written this guest-post on The Digital Mishnah Project. Feel free to leave Hayim your feedback in the comments section below or on the project’s site.

I am pleased to formally announce the relocation of my project to a server at the University of Maryland. I would also like to thank the Talmud Blog for hosting this guest post, which will also appear on my project blog at blog.umd.edu/digitalmishnah. The transition to the new site is not entirely complete, but it is complete enough to talk about it here.

In this post, I’d like to describe the project, give a brief user’s guide, and talk about next steps. At Yitz Landes’s suggestion I’ve also provided a PDF of my paper for the Peter Schäfer festschrift on this topic.

1. The project to date

The project initially was conceived as a side project to an annotated translation of the Mishnah that I am editing with Shaye Cohen (Harvard) and Bob Goldenberg (SUNY Stony Brook, emer.), under contract with Oxford, for which I am also contributing translation and annotations for tractate Neziqin, or Bava Qamma, Bava Metsi’a, and Bava Batra. Since I was going to spend time looking at manuscript variants anyway, I reasoned, why not just work more systematically, and develop a digital edition. Much more complicated than I imagined!

The demo as it is available today on the development server is not significantly different in functionality from what was available in April 2013. However, there is now much more text available. While all the texts need further editing (including for encoding errors that interfere with output), there is enough available to get a very good start at a critical edition (or at least a variorum edition) of tractate Neziqin including several Genizah fragments, with an emphasis on transcribing fragments that constitute joinable sections of larger manuscripts.

2. User’s guide

When you access the demo edition, you will have two choices: “Browse” and “Compare.”

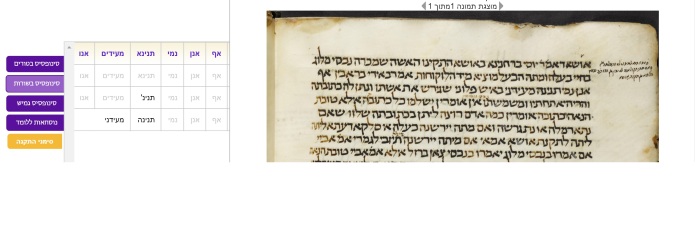

Following the link to the Browse page gives you the list of transcribed witnesses. Selecting one will allow you to browse through the manuscript page by page, column by column, or chapter by chapter with a more compact display of the text.

When you first get to the Compare page, all the witnesses installed to date are displayed and the interactive form is not yet activated [bad design alert!]. It will only be activated when you use the collapsing menus on the left to select text at the Chapter or Mishnah level. You are welcome to poke around and see where there are pieces of text (typically based on Genizah fragments) outside of the Bavot. However, significant amounts of text and of witnesses are only available for these three tractates.

To compare texts, select order (Neziqin) > tractate (one of the Bavot) > chapter (1-10) > mishnah (any one, or whole chapter).

The system will limit the table to those witnesses that have text for the selected passage and enable the table. At that point, you can select witnesses by putting a numeral into the text field. The output will be sorted based on that number. Then select the Compare button on the right. Output will appear below the form, and you may need to scroll down to it.

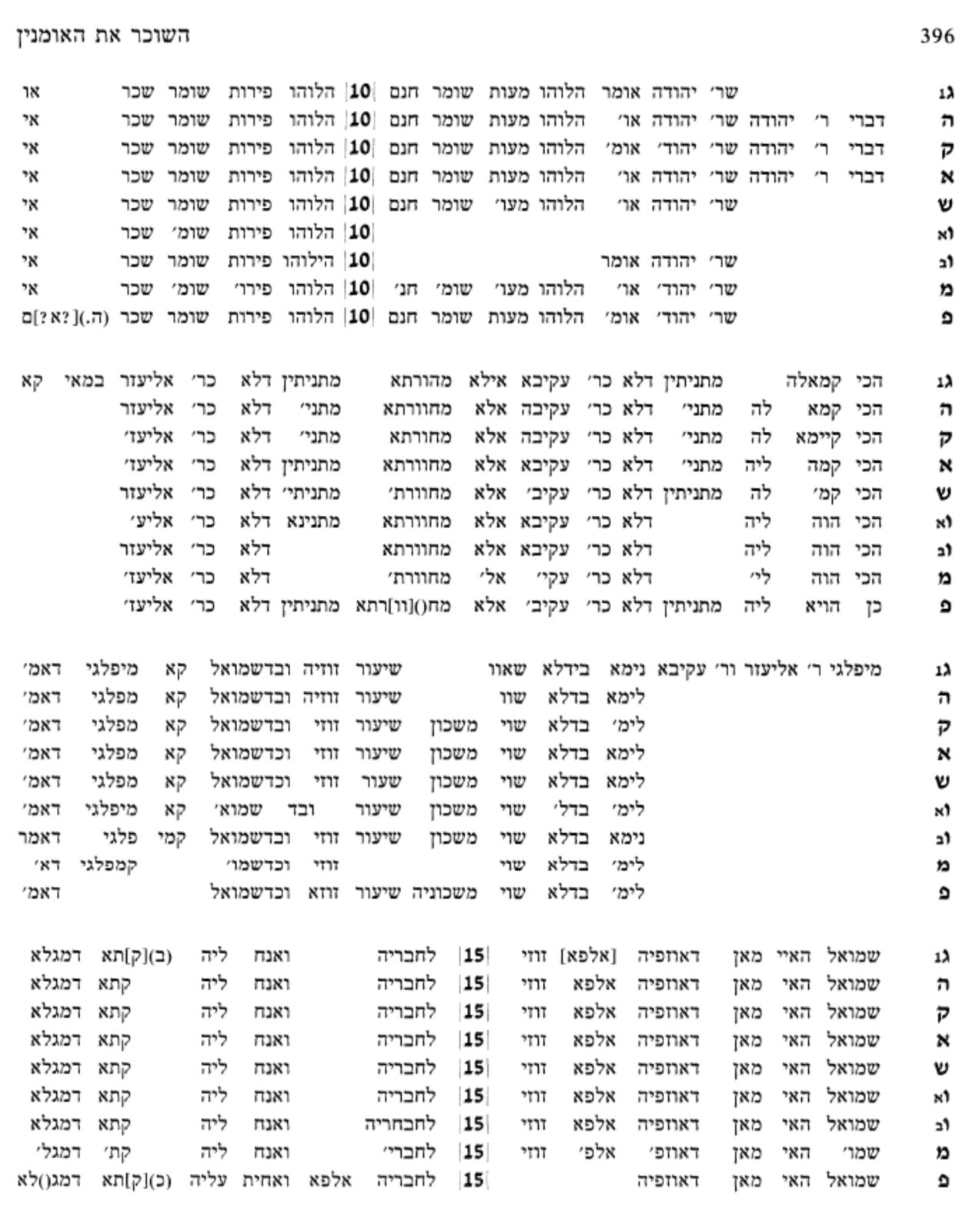

There are three output options. By default, you get an alignment in tabular form. Also possible are a text and apparatus (really, just a proof of concept) and a pretty featureless presentation in parallel columns.

3. Desired features

Here are a number of features I would like to see. I would also be glad to hear about additional features that potential users would find useful.

- Highlighting of orthographic and/or substantive variants in alignment table view. (Current highlighting uses CollateX’s own output, and there appear to be some errors. In addition to correcting these errors, it would be useful to highlight and color code types of variation.)

- In parallel column view, selecting one text highlights the corresponding text in the other columns.

- Downloading results (excel, TEI parallel segmentation, etc.).

- Quantitative analysis (distance, grouping, stemmatics)

- Correcting output. The preceding features are only as useful as the alignment is good. While down the road, the project will be able to generate an edited alignment table that will obviate the on the fly collation that is currently offered, for the present, users need a way of adjusting the output to reflect what a human eye and brain (carbon-based liveware) can see. At a minimum, this corrected alignment should be available to be reprocessed in the various output and analysis options.

- Morphological tagging. This is something that Daniel Stoekl ben Ezra and I have been working on (see below).

4. Behind the scenes

For those interested in what is happening behind the scenes, here is a brief description.

The application is built in cocoon. The source code is available at https://github.com/umd-mith/mishnah.

The texts are transcribed and encoded in TEI, a specification of XML developed for textual editing. The transcriptions aim at fairly detailed representation of textual and codicological features, so that the database might be useful also for those interested in the history of the book.

For the Browse function, the system selects the requested source, citation, and transforms the base XML into HTML (using XSLT), and presents it on the monitor.

For the Compare function, the system extracts the selected text from the selected witnesses, passes it through the CollateX program, and then transforms the result into HTML using XSLT. The process is actually somewhat more complicated, since in order to get good alignment a certain amount of massaging is required before passing the information into CollateX, and the output requires then requires some further handling. The text is tokenized (broken into words as comparison units), the tokens are regularized (all abbreviations are expanded, matres lectionis are removed, final aleph is treated as final heh, etc.). CollateX then aligns the regularized tokens, but the output needs to be re-merged with the full tokens. This re-merged text is what is presented in the output.

Ideally, the transcriptions would be done directly into TEI using an XML editor. (Oxygen now has fairly good support for right-to-left editing in “author” mode.) In practice, and especially when supervising long distance, it is easier for transcribers to work in Word. I have developed a set of Word macros that allow transcribers to do the kind of full inputting of data the project requires, and an XSLT transformation to transform the word document from Microsoft’s Open XML to TEI, with a certain amount of post-processing required.

5. Technical next steps

Both for internal processing and in order to align this project more closely with that of Daniel Stoekl ben Ezra (with whom I have been collaborating), the XML schema that governs how to do the markup will need to be changed, so that each word has its own unique address (@xml:id). This also means that tags that can straddle others (say, damage that extends from the end of one text to the beginning of another) will need to be revised. Once I am revising the schema, it will be useful to tighten it, and limit the values that can be used for attributes. This will make it easier for transcribers to work directly in XML.

Encoding of Genizah fragments pose a particular set of problems. (1) We need a method for virtually joining fragments that belong to a single manuscript while also retaining the integrity of the original fragment. At present, each fragment is encoded separately and breaks have pointers to locations in a central reference text. A procedure is necessary to then process the texts and generate a composite. At present this is envisioned with texts that are known to join. At a later stage the approach could be generalized to search for possible joins. (2) Fragmentary texts in general pose a problem in alignment, since we need to distinguish textual absences from physical gaps due to preservation for the alignment program. The system of pointing described in (1) will facilitate this.

The above are necessary to make the present version of the demo function effectively. Toward a next phase, one essential feature is the correction of the alignment output (see also above). I can envision two use cases. In one, the individual user makes corrections for his or her own use, and the edition does not provide a “curated” alignment. Alternatively, we build a content management system that allows the editors to oversee the construction of corrected alignments that become part of the application. When completed, this “curated” version replaces the collation that takes place on the fly. In either case, corrected output makes possible a suite of statistical functions that I would like to implement.

Finally, for our proposed model digital edition, Daniel Stoekl ben Ezra and I have discussed a morphological tagging component. Here we have worked with Meni Adler (BGU) to create preliminary morphological analysis based on modern Hebrew and with a programmer to create a markup tool. Ideally, the corrected markup could then be recycled to train morphological analysis programs on rabbinic Hebrew and Medieval/Early Modern orthography.

I have benefited from the support of the Meyerhoff Center for Jewish Studies, the History Department, and the Maryland Institute for Technology in the Humanities (MITH). MITH, and in particular then assistant director Travis Brown, built the web application and worked with me as I slowly and painfully learned how to build the parts that I built directly. Trevor Muñoz of MITH was insistent that I develop a schema and helped to do so, the wisdom of which I am only now learning. Many professional transcribers and students worked on transcriptions and markup. These are listed in the file ref.xml available in the project repository, and I hope to make an honor roll more visible at a later date.

Many scholars and institutions have been gracious about sharing texts and information. Michael Krupp shared transcriptions of the first four orders of the Mishnah. Peter Schaefer and Gottfried Reeg made available the Mishnah texts from all editions of the Yerushalmi included in the Synopsis. Accordance, through the good offices of Marty Abegg, made pointed transcriptions of the Kaufmann manuscript available. Daniel Stoekl ben Ezra and I have been collaborating for some time now, and have proposed a jointly edited model edition of Bava Metsi’a pending funding.